Thinking Inside the Box

How Constraints Turned into a DevOps Superpower

4/6/2025 · 4 min read

Ever start building something cool, then quickly realize you might just be tripping over your shoelaces? Yeah, me too. In fact, that's exactly what kicked off my latest round of back-and-forth with my AI sidekick.

Here's the short version: I'm trying to set up a smooth, scalable Plex server that's friendly enough to stream movies to a dozen users simultaneously. Sounds simple enough, right? Wrong (as usual). As many of you innovative homelabbers know, "simple" rarely applies once 4K streams, transcoding, remote clients, and WiFi-connected smart TVs enter the picture.

My trusty ThinkPad T14s has been valiantly chugging along, Plex installed and running directly on top of my Kubernetes-based homelab architecture. Yet, lately, Plex has felt less 'Netflix meets NASA' and more 'donkey pulling a spaceship.' Alright, that analogy was rough—but you get the picture. Streams were lagging, systems were stalling, my frustration level was rising, and popcorn was going sadly cold. Clearly, something had to change.

It’s precisely in these moments that I'm grateful my project funds aren't exactly unlimited (hey, constraints are secretly a founder's best friend; hear me out). Deep pockets let you leapfrog past critical knowledge gaps, and that means missing an essential truth I've learned: the quality of an application experience can never exceed the capabilities and robustness of the foundations beneath it.

This nugget of hard-earned wisdom popped up right in the middle of my AI-assisted brainstorming sesh and hit me like a perfectly executed git merge (git magic --epiphany). Suddenly, problems crystallized. Plex wasn’t struggling because my drive throughput was too slow or due to networking misconfigurations. It struggled for a more fundamental, CPU-hogging reason: transcoding.

Transcoding, for the uninitiated, is Plex’s process of converting movies from a pristine, original, beautiful 4K file down to lower resolutions or different codecs to suit viewers’ hardware—like that old Roku (“why won’t you direct play?!”). Turns out, my ThinkPad—a wonderful laptop for most daily tasks—was neither NASA nor Netflix-ready for real-time conversion demands. Watching CPU usage sit permanently at 100% was a bit like watching a small hamster spinning unsustainably fast on a wheel. Cute? Sure. Reliable? Not exactly.
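To make the direct-play-versus-transcode split concrete, here's a minimal sketch of the decision. Big caveat: this is a simplified mental model, not Plex's actual logic (the real thing also weighs bitrate, containers, audio, subtitles, and more). The class names, client names, and capability lists below are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaFile:
    codec: str   # video codec of the file on disk, e.g. "hevc"
    height: int  # vertical resolution in pixels, e.g. 2160 for 4K


@dataclass(frozen=True)
class Client:
    name: str                # hypothetical device name
    codecs: frozenset[str]   # codecs the device can decode on its own
    max_height: int          # highest resolution the device can display


def needs_transcode(media: MediaFile, client: Client) -> bool:
    """Return True when the server would have to convert the file for this client."""
    unsupported_codec = media.codec not in client.codecs
    too_large = media.height > client.max_height
    return unsupported_codec or too_large


# Illustrative data only: one 4K HEVC movie, two imaginary clients.
movie = MediaFile(codec="hevc", height=2160)
clients = [
    Client("living-room-tv", frozenset({"h264", "hevc"}), 2160),
    Client("old-roku", frozenset({"h264"}), 1080),
]

# Every name in this list costs the server CPU (or GPU) time for as long as the stream runs.
transcodes = [c.name for c in clients if needs_transcode(movie, c)]
print(transcodes)
```

Every client that lands in that list is one more real-time conversion job for the server, which is exactly the load that flattened my poor ThinkPad.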

Now, here’s where it gets interesting. Those constraints I mentioned earlier? They forced me to rethink how to scale. Rather than buy one hulking system to brute-force the whole setup, I considered adding multiple modestly powerful HP ProDesk mini PCs to form a Proxmox cluster. These affordable little systems won't exactly dominate the transcoding Olympics, but they absolutely excel at running lightweight Kubernetes-managed tasks: handling DNS, managing the network, and reliably automating deployments. By leaning into these scaled-down, modular units, I can now clearly see how cluster-aware, pattern-based infrastructure sets an invaluable foundation, leaving my eventual, inevitable GPU-powered transcoding beast free to tackle precisely the specialized workloads it thrives on. Smart spending equals strategic specialization.

It's all about working smarter, not harder. My GitOps-powered Kubernetes setup exemplifies this beautifully: by writing clear, reusable configurations, and by letting Kubernetes elegantly handle things like IP addresses (“thank you Kubernetes for killing manual complexity!”), I can spin up complete app stacks (like my media-server "arr stack," with Radarr, Sonarr, Jellyfin, the whole nine yards) and replicate them with just a few commits. What might take traditional IT teams weeks or months shrinks to minutes or hours. Write once, scale infinitely. Now that's DevOps poetry.
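Here's a rough sketch of what "write once, reuse everywhere" can look like. To be clear, this is not my actual Flux/Helm setup; it's a toy Python stand-in for the same idea. The `deployment` helper, the `media` namespace, and the `example.invalid` image names are placeholders I invented for the example. The ports are the defaults these apps usually ship with.

```python
import json


def deployment(name: str, image: str, port: int, namespace: str = "media") -> dict:
    """Build a minimal Kubernetes Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


# One template, many apps. Image references are placeholders, not real registries.
APPS = [
    ("radarr", "example.invalid/radarr:latest", 7878),
    ("sonarr", "example.invalid/sonarr:latest", 8989),
    ("jellyfin", "example.invalid/jellyfin:latest", 8096),
]

manifests = [deployment(name, image, port) for name, image, port in APPS]

# Kubernetes accepts JSON as well as YAML, so these could be committed to a Git repo as-is.
print(json.dumps(manifests[0], indent=2))
```

Adding a fourth app is one more line in `APPS` and one more commit, which is the whole appeal: the pattern gets written once and the repo does the rest.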

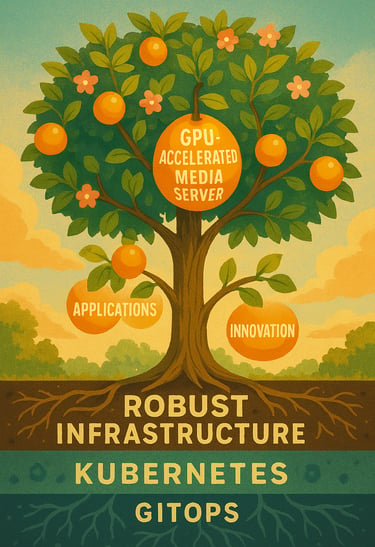

Ultimately, the true magic revealed itself through this journey of discovery—it's not about buying our way to success, but about deliberately exploring limitations and constraints to gain a deep foundational knowledge. Only once I deeply understand root-layer infrastructure (shoutout to Kubernetes, Flux, Helm, Ansible) can I confidently invite complexity into my system at precisely the points it's genuinely helpful—not earlier, not later. Infrastructure roots come first, the branches (like specialized GPU transcoding) grow later, naturally and efficiently upward.

This realization isn't just about technology—it's a mindset, a personal growth hack, and a fundamental approach to solving life's bigger puzzles. It's seeing infrastructure not as something we abstract away and conveniently ignore but as our project's very heartbeat—its pulse literally powering all our creativity, innovation, and ideas. We ignore it at our own peril.

Maybe the biggest lesson here? Quality is built from within. If we commit to thoughtfully understanding and improving foundational infrastructure, everything layered on top—apps, experiences, creativity, growth—benefits exponentially. It's empowering, strategic, sustainable, and profoundly exciting.

So, next time you're frustrated by lagging streams, failing workloads, or cold popcorn, ask yourself: what's really beneath my problems? Is my infrastructure truly ready to scale? Usually, the best solutions don't require buying bigger toys. They start with understanding the real bottleneck and getting clearly structured foundations in place first.

Stay tuned, my fellow home-innovators, dreamers, Kubernetes wranglers, and container magicians—we're just getting this infrastructure party started!

Until next time, keep your NAS humming and your clusters clustering. See you on the command line, friends! 🍿✨🚀